The Monte Carlo method accounts for the fact that not all parts have the same dimensions, without assuming that the variation in part dimensions follows a normal distribution.

Although the normal distribution commonly applies, it may not be the best way to summarize the data if the process includes sorting or regular adjustments, or if the distribution is clipped or skewed.

As with any tolerance setting, getting it right is key for the proper functioning of a product. The Monte Carlo method allows you to consider and use the appropriate models for the variations that will exist across your components.

The Monte Carlo method uses the part variation information to build a system of randomly selected parts and determine the system dimension. By repeating the simulated assembly a sufficient number of times, the method provides a set of assembly dimensions that we can then compare to the system tolerances to estimate the number of systems within a specific range or tolerance.

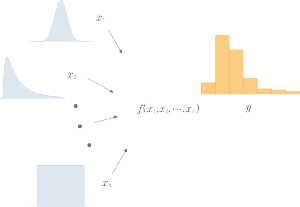

In short, we randomly select part dimensions and apply a transfer function (to simulate how the parts combine to create the final dimension) to create the resulting assembly distribution of dimensions.

The resulting distribution will be normal when all of the input part dimensions are normally distributed and the transfer function is a linear combination, such as a sum. If there are a large number of parts in the stack, the result is also likely to be close to a normal distribution. With only a few parts whose dimensions are not normally distributed, the result will likely not be normally distributed.
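To illustrate how the shape of the result depends on the number of parts, here is a minimal Python sketch. It assumes hypothetical parts with uniformly distributed thicknesses between 24 and 26 mm (these limits are not from the article) and uses excess kurtosis, which is near 0 for normal data, as a rough indicator of normality.

```python
import random
import statistics

def excess_kurtosis(values):
    """Sample excess kurtosis; close to 0 for normally distributed data."""
    mean = statistics.fmean(values)
    n = len(values)
    m2 = sum((v - mean) ** 2 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    return m4 / m2 ** 2 - 3.0

def simulate_stack(n_parts, runs, rng):
    """Sum n_parts uniform thicknesses (assumed 24 to 26 mm) for each run."""
    return [sum(rng.uniform(24.0, 26.0) for _ in range(n_parts))
            for _ in range(runs)]

rng = random.Random(1)  # fixed seed so the sketch is repeatable
k_few = excess_kurtosis(simulate_stack(2, 50_000, rng))
k_many = excess_kurtosis(simulate_stack(30, 50_000, rng))

print(f"2 parts:  excess kurtosis = {k_few:.2f}")
print(f"30 parts: excess kurtosis = {k_many:.2f}")
```

With two uniform parts the stack distribution is triangular and clearly flatter than a normal curve, while with thirty parts the excess kurtosis should land close to zero.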

Number of Simulation Runs

For a simulation to produce consistent results, it should use enough runs for the result to converge within a defined margin. Commercial Monte Carlo software packages monitor the results after each run to determine the convergence and stop when the value remains within a pre-specified range.

One way to estimate the number of runs requires a two-step approach.

First, run the simulation for 1000 runs.

Calculate the standard deviation of the resulting values.

Then use the estimated standard deviation to estimate the number of runs m needed to achieve the desired sampling confidence and accuracy:

$$ \large \displaystyle m = \left( \frac{z_{\alpha/2} \times \sigma}{Er(\mu)} \right)^{2} $$

where Zα/2 is the standard normal statistic for a two-sided C confidence, where α = (1 − C), with C being the statistical sampling confidence, commonly set to 95%, and Er(μ) is the standard error of the mean and is related to the amount of variation of the estimated mean.

Dividing the numerator and denominator by μ converts the terms to percentages:

$$ \large \displaystyle m = \left( \frac{z_{\alpha/2} \times \sigma / \mu}{Er(\mu) / \mu} \right)^{2} $$

Thus, Er(μ)/μ is the percentage accuracy (convergence threshold), and σ/μ is the relative standard deviation.

Simple Example

Step 1: Define the problem and the overall study objective.

What is the appropriate tolerance for individual plates used in a stack of five to achieve a combined thickness of 125 ± 2 mm?

Given the variation of the plate thicknesses, how many assemblies will have thicknesses outside the desired tolerance range?

Step 2: Define the system and create a transfer function that defines how the dimensions combine: y = f(x1, x2, …, xn).

Assuming no bow or warp in the plates, we find that the stack thickness of five plates is simply the added thicknesses of each plate: y = x1 + x2 + x3 + x4 + x5.

Step 3: Collect part distributions or create estimated distributions.

In this simple example, the five plates are identical and come from the same population, with a mean thickness of 25 mm and a standard deviation of 0.33 mm.

Step 4: Estimate the number of runs for the simulation.

The five plates have normally distributed thicknesses of 25 ± 0.99 mm (a result that should converge to the same result as if using the RSS method).

Instead of setting up and running the Monte Carlo simulation to estimate the standard deviation, we can use the calculated standard deviation for the combined five plates, σ = 0.7379 mm.
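For reference, the calculated value follows from adding the variances of the five independent plates, each with a standard deviation of 0.33 mm:

$$ \large \displaystyle \sigma_{y} = \sqrt{\sum_{i=1}^{5} \sigma_{i}^{2}} = \sqrt{5 \times 0.33^{2}} = \sqrt{0.5445} \approx 0.7379 \text{ mm} $$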

Let’s use a confidence of 95%; thus, α = 1 − C = 1 − 0.95 = 0.05. Let’s conduct sufficient simulation runs to estimate the mean stack thickness to within 0.01 mm.

We have Zα/2 = 1.96 (in Excel, =NORMSINV(0.975)), σ/μ = 0.7379/125 = 0.0059, and Er(μ) set at 0.01 mm, which means Er(μ)/μ is 0.01/125 = 0.00008.

The number of required runs, m, is then 144.628^2 ≈ 20,917.

Creating roughly 21,000 runs with Excel may be difficult, if even possible.

Thus you may require the use of a Monte Carlo package such as Crystal Ball (an Excel add-on) or 3DCS Variation Analyst within SolidWorks. R software is another option.
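The Step 4 estimate can also be sketched in Python using only the standard library. This is an illustrative sketch, not a replacement for the packages above: the function name `required_runs`, the seed, and the 0.01 mm error target are assumptions made for the example. Note that z here is the two-sided value (about 1.96 at 95% confidence); a one-sided value of 1.645 would give a smaller count, roughly 14,700.

```python
import math
import random
import statistics
from statistics import NormalDist

def required_runs(sigma, error, confidence=0.95):
    """m = (z_{alpha/2} * sigma / Er(mu))^2, rounded up to a whole run."""
    alpha = 1.0 - confidence
    z = NormalDist().inv_cdf(1.0 - alpha / 2.0)  # two-sided z, about 1.96 at 95%
    return math.ceil((z * sigma / error) ** 2)

# Option A: use the calculated stack standard deviation (sqrt(5) * 0.33 mm).
sigma_calc = math.sqrt(5) * 0.33
runs_calc = required_runs(sigma_calc, error=0.01)

# Option B: two-step approach, estimating sigma from a 1000-run pilot.
rng = random.Random(2024)  # assumed seed, only for repeatability
pilot = [sum(rng.gauss(25.0, 0.33) for _ in range(5)) for _ in range(1000)]
sigma_pilot = statistics.stdev(pilot)
runs_pilot = required_runs(sigma_pilot, error=0.01)

print(f"calculated sigma = {sigma_calc:.4f} mm -> {runs_calc} runs")
print(f"pilot sigma      = {sigma_pilot:.4f} mm -> {runs_pilot} runs")
```

The pilot-based count will differ somewhat from the calculated one because a 1000-run estimate of the standard deviation carries its own sampling error.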

Step 5: Generate a random set of inputs.

For each of the five parts in the example, draw at random a thickness value from the normal distribution with a mean of 25 mm and a standard deviation of 0.33 mm. If you are using Excel, the thickness for one plate can be found with =NORMINV(RAND(),25,0.33). Do this for each part in the stack.

One run set of inputs may result in 25.540, 25.008, 24.565, 24.878, and 24.248 mm.

Step 6: Evaluate the inputs.

Use the input values in the transfer function to determine the run’s result. In this example, we add the five thickness values to find the stack thickness. The sum of the five thicknesses for the one run in Step 5 is 124.239 mm.

Step 7: Repeat Steps 5 and 6 for the required number of runs (Step 4) and record each run’s result.
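Steps 5 through 7 can be sketched in Python as follows. This is a minimal illustration under the example's stated assumptions (five plates, each normal with mean 25 mm and standard deviation 0.33 mm); the function names, the seed, and the run count of 20,000 are placeholders, so substitute whatever Step 4 gives for your case.

```python
import random
import statistics

def stack_thickness(thicknesses):
    """Transfer function from Step 2: y = x1 + x2 + ... + xn."""
    return sum(thicknesses)

def simulate(runs, n_plates=5, mean=25.0, sd=0.33, seed=None):
    """Repeat the simulated assembly and return every stack thickness."""
    rng = random.Random(seed)
    results = []
    for _ in range(runs):
        # Step 5: draw one random thickness per plate.
        plates = [rng.gauss(mean, sd) for _ in range(n_plates)]
        # Step 6: evaluate the transfer function for this run.
        results.append(stack_thickness(plates))
    # Step 7: the recorded results of all runs.
    return results

results = simulate(runs=20_000, seed=42)
sim_mean = statistics.fmean(results)
sim_sd = statistics.stdev(results)
print(f"mean = {sim_mean:.3f} mm, sd = {sim_sd:.4f} mm")
```

The printed mean and standard deviation should land close to 125 mm and 0.74 mm, though the exact digits depend on the seed and the number of runs.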

Step 8: Analyze the results using a histogram, confidence interval, best-fit distribution, or another statistical method.

When all the input distributions are normal distributions, the resulting distribution will also be normal. For the five plates, the result is a normal distribution with a mean of 125 mm and standard deviation of 0.7379 mm.

As with the RSS method, we can then calculate the percentage of assemblies that have dimensions within 125 ± 2 mm. We find that 99.33% fit within the defined tolerance when each plate is 25 ± 0.99 mm.
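Because the stack in this example is normally distributed, the percentage within tolerance can be checked directly from the normal CDF. The sketch below uses Python's `statistics.NormalDist`; the helper name `fraction_within` is an assumption for illustration, and the ± 3 mm value is included only for comparison.

```python
import math
from statistics import NormalDist

# Stack distribution from the example: mean 125 mm, sd = sqrt(5) * 0.33 mm.
stack = NormalDist(mu=125.0, sigma=math.sqrt(5) * 0.33)

def fraction_within(dist, nominal, half_width):
    """Fraction of assemblies between nominal - half_width and nominal + half_width."""
    return dist.cdf(nominal + half_width) - dist.cdf(nominal - half_width)

p2 = fraction_within(stack, 125.0, 2.0)
p3 = fraction_within(stack, 125.0, 3.0)
print(f"within 125 +/- 2 mm: {p2:.2%}")
print(f"within 125 +/- 3 mm: {p3:.3%}")
```

For input distributions that are not normal, the same fraction would instead be estimated by counting the simulated stack thicknesses that fall inside the limits.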

We can explore changing the part tolerance, if supported by manufacturing processes, to determine the impact on final tolerance.

Best Practices and Assumptions

If you assume or actually have part dimensions that can be described by the normal distribution, the Monte Carlo method results will equal the RSS method results.

If uncertain of how a part dimension is distributed within its tolerance, use a uniform distribution. If you suspect the dimensions cluster around the center of the tolerance range for the part, then use the triangle distribution.
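As a rough sketch of how this choice affects the result, the Python snippet below simulates the five-plate stack with uniform, triangular, and normal inputs. It assumes the uniform and triangular distributions span the 25 ± 0.99 mm range from the example; the helper name `stack_sd`, the seed, and the run count are illustrative choices.

```python
import random
import statistics

LOW, HIGH = 24.01, 25.99  # assumed limits: 25 +/- 0.99 mm

def stack_sd(draw, runs=20_000, n_plates=5):
    """Standard deviation of the simulated stack for a given part sampler."""
    stacks = [sum(draw() for _ in range(n_plates)) for _ in range(runs)]
    return statistics.stdev(stacks)

rng = random.Random(7)  # assumed seed, only for repeatability
sd_uniform = stack_sd(lambda: rng.uniform(LOW, HIGH))
sd_triangular = stack_sd(lambda: rng.triangular(LOW, HIGH, 25.0))
sd_normal = stack_sd(lambda: rng.gauss(25.0, 0.33))

print(f"uniform parts:    stack sd = {sd_uniform:.3f} mm")
print(f"triangular parts: stack sd = {sd_triangular:.3f} mm")
print(f"normal parts:     stack sd = {sd_normal:.3f} mm")
```

The uniform assumption is the most conservative of the three: it spreads parts evenly across the whole tolerance range, so it produces the widest stack distribution.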

Preferably, you should use an estimate of the actual distribution of part variation based on measurements.

When that is not possible, then use engineering judgment and manufacturing process knowledge to estimate the part variation distribution.

Vendors may have measurements of similar parts that may prove to be a suitable estimate for the distribution values.

Why does the standard error formula not apply here? If I’m stacking 5 plates, I’d expect the standard deviation of the stack to be 1/sqrt(5) times the s.d. of the individual plate population. If the s.d. of the plate population is 0.33mm, I’d expect the s.d. of the stack to be about 0.08mm. Could you please explain. Thank you.

Recall that we cannot add or average standard deviations directly; we have to work with variances. Plus, the variation of a stack is going to be larger, not smaller, than the variation of an individual plate within the stack.
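To make the distinction concrete, assuming n = 5 independent plates that each have σ = 0.33 mm, the 1/√n factor applies to the mean of the plate thicknesses, while the stack is their sum:

$$ \large \displaystyle \sigma_{\text{sum}} = \sqrt{n \, \sigma^{2}} = \sqrt{5} \times 0.33 \approx 0.74 \text{ mm}, \qquad \sigma_{\bar{x}} = \frac{\sigma}{\sqrt{n}} = \frac{0.33}{\sqrt{5}} \approx 0.15 \text{ mm} $$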